Come together: an exploration of social driving behaviour of automated vehicles

Abstract

Interaction between road users is a fundamental part of the traffic system. The advent of automated vehicles (AVs) has given rise to requirements for interactions between AVs and other road users, expressed in high-level terms such as ‘demonstrate anticipatory behaviour’, ‘not confusing other road users’, and ‘being predictable and manageable for other road users’. Operationalizing these social driving behaviours requires social science knowledge of human interaction. However, translating social driving behaviour requirements unambiguously into the engineering domain requires social scientists to have a rudimentary understanding of the language of engineers (and vice versa). The present study seeks to facilitate interdisciplinary collaboration between social scientists and engineers by providing insight into current AV technological capabilities with regard to social driving behaviour and road safety, and their development in the near future. To this end, an exploratory interview study was performed with seven engineers with backgrounds in industry, academia, research institutes, and/or vehicle authorities. The engineers provided several real-world examples of how AV algorithms affect social driving behaviour. Thematic analysis of the interview transcripts resulted in clusters of themes relating to the product development process: requirements (i.e., societal, legal, customers), development (i.e., process, implementation), and evaluation (i.e., assessment, monitoring). Choices made in each of these phases appear to influence the final behaviour of automated vehicles in traffic. Knowledge of social driving behaviour and its impact on traffic safety can guide these choices to ensure safe operation of AVs within the social environment of traffic.

1. Introduction

The advent of automated vehicles (SAE level 3+) is predicted to change transportation on a global scale (Elvik, 2020; Hoogendoorn et al., 2014). The rapid improvements made to (semi-)automated vehicles, and the progress towards fully automated vehicles (AVs), are made possible by technological and engineering solutions. These solutions could enable vehicles to travel from A to B autonomously. However, being a part of the traffic system requires more than the ability to control a vehicle; it also requires interaction with other road users, including vulnerable road users (VRUs) such as bicyclists and pedestrians, and human-driven vehicles (Grahn et al., 2020; Markkula et al., 2020; Straub & Schaefer, 2019). These interactions between road users are based on formal regulations such as ‘yielding to traffic coming from the right’ (the rules of the road), but also on informal regulations based on societal norms and values, such as drivers voluntarily giving way to VRUs beyond their obligations. Drivers also use subtle cues to signal their intentions to other road users, such as slowing down early and gradually when nearing a crosswalk to indicate to pedestrians that they can cross the road safely. This social aspect of driving is a crucial element that is not always present in current automated driving solutions, whether in production vehicles or proposed in the literature (Brown & Laurier, 2017; Negash & Yang, 2023; Villadsen et al., 2023). The importance of the human and social aspects of driving for automated vehicles is being acknowledged by legislative bodies. For example, regulations on automated lane keeping systems (UNECE, 2021) state that “the system shall aim to keep the vehicle in a stable lateral position inside the lane of travel to avoid confusing other road users”, and regulations on advanced driver assistance steering systems (UNECE, 2025) state that “A lane change procedure shall be predictable and manageable for other road users.”
However, which variations in lane position would actually confuse other road users, and what constitutes a predictable and manageable lane change, is still under discussion. Further development and specification of these requirements necessitates insight into human behaviour. Such insight is often the domain of the so-called ‘social sciences’ (i.e., psychology, sociology, linguistics, and other disciplines with a focus on human cognition, interaction and communication), but it is not always readily available or usable in other domains. Collaboration is therefore needed between social scientists and engineers to develop AVs that integrate seamlessly into a traffic system where social dynamics are crucial for managing and preventing conflicts. Yet, this collaboration comes with challenges (Jones & Jones, 2016; Lowe et al., 2013), and differences between the two fields create a so-called ‘epistemological gap’ in terms of both knowledge and approaches (Hadfield-Hill et al., 2020). The aim of this study is to reduce this gap by identifying the challenges engineers face when developing social aspects of AV driving behaviour and highlighting how social scientists can contribute.

1.1. Research question & approach

This study addresses the following research question: How can social scientists use their knowledge to improve social driving behaviour of automated vehicles?

Understanding the hurdles to implementing social behaviour in AVs is essential for integrating social science knowledge into the development of social driving behaviour. Therefore, seven engineers working in the field of AV perception and decision making were interviewed about their insights into the development of algorithms and requirements for AVs regarding social driving behaviour. To convey these insights effectively to social scientists, a strong focus was placed on obtaining examples of related real-world behaviours. Such examples help bridge the gap between the engineering insights obtained and the perspective of social scientists by creating a point of congruence (Hadfield-Hill et al., 2020). Furthermore, this exploratory study aimed to capture a wide range of engineering insights rather than to interview a homogeneous group of participants. We first present the themes that arose during the interviews, each accompanied by the related real-world examples. We then use these results to explore how social scientists can apply their expertise to improve the social driving behaviour of AVs.

2. Background

The perceived relevance of social sciences for automotive engineering has been increasing, especially in research focusing on the vehicle interior. Multiple standards, e.g., ISO 9241-210:2019 (ISO, 2019), were developed to provide requirements and recommendations for human-centred HMI design. More recently, there has been a growing interest in the interactions between road users, especially when AVs are part of the interaction (e.g., Brown & Laurier, 2017; Knoop et al., 2019; Madigan et al., 2019).

Previous work (e.g., Grahn et al., 2020) investigated how human drivers enable safe driving within the social environment on the road. Transferring these skills from human drivers to automated driving could result in AVs that integrate more seamlessly into the social traffic system, likely improving safety and efficiency as a result (Straub & Schaefer, 2019).

2.1. Different approaches

The approach to investigating the interactions between human drivers and AVs appears to differ substantially between engineers and social scientists. An engineering perspective requires well-defined descriptions of the behaviour that needs to be performed, and of when to perform this behaviour and when not to. Engineering studies often aim to provide a solution to the described interaction, often in the form of an algorithm or a fixed procedure. Translating human behaviour into a more precise, often mathematical, description results in what is known as a behavioural specification (Bin-Nun et al., 2022). These behavioural specifications define intended behaviour in a way that AVs can implement, similar to how traffic laws guide human drivers. Traffic laws, written from a social science perspective, allow for driver judgment and adaptation based on the circumstances, often using broad terms like “when safe” or “when appropriate.” In contrast, behavioural specifications follow a precise, mathematical approach, reflecting the differing perspectives of social scientists and engineers.

A clear example of differing perspectives is an AV approaching a pedestrian crossing. Engineers require precise braking parameters to achieve socially desirable interactions (e.g., Lee et al., 2017). Social science research on pedestrian crossings offers valuable insights (e.g., Beggiato et al., 2018; Razmi Rad et al., 2020; Tian et al., 2023), but lacks the detail needed for direct implementation, creating a gap between knowledge and engineering application.

2.2. Social driving behaviour

In a broad context, Schmitt (1998) defines social behaviour as follows: “a person’s behaviour is social when its causes or effects include the behaviour of others”. It is important to note that this definition is ‘value free’, meaning that social behaviour as defined by Schmitt could manifest as either pro-social or anti-social behaviour. An example of pro-social behaviour in a driving context is granting right-of-way to another driver even when not required to do so by law, while an anti-social example could be cutting in front of another driver. Some social behaviours are intended, while others influence other road users without any intention to do so. An example of intended social behaviour in a driving context is using the vehicle’s indicators to communicate a desire to change lanes to other road users. An example of unintended behaviour that influences others is the braking pattern of a vehicle. Even if a braking pattern is chosen solely to stop at a set position, it still communicates information about intent and future position to other road users, whose state, behaviour and goals could be influenced by it. For example, braking early and gradually for a pedestrian crossing also communicates the intent to stop to any waiting pedestrians, while braking late and suddenly could be interpreted by waiting pedestrians as unwillingness to stop or a failure to notice them (Tian et al., 2023). Additionally, not accounting for unexpected braking patterns may result in rear-end crashes (Favarò et al., 2017). Therefore, unintended influences of AV behaviour should be taken into account when developing AVs.
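The difference between braking early and gradually and braking late and suddenly can be made concrete with basic kinematics. Assuming uniform deceleration to a standstill and ignoring reaction time (both simplifying assumptions), the deceleration needed to stop within a distance d from speed v follows from v² = 2ad, i.e., a = v²/(2d). The sketch below uses an illustrative approach speed of 50 km/h and two hypothetical braking onset distances; the function names and numbers are illustrative only and are not taken from the cited studies.

```python
def kmh_to_mps(speed_kmh: float) -> float:
    """Convert a speed from km/h to m/s."""
    return speed_kmh / 3.6


def required_deceleration(speed_mps: float, distance_m: float) -> float:
    """Constant deceleration (m/s^2) needed to stop from speed_mps within distance_m.

    Derived from v^2 = 2 * a * d for uniform deceleration to standstill;
    reaction time and jerk limits are ignored (simplifying assumptions).
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    return speed_mps ** 2 / (2 * distance_m)


# Hypothetical scenario: approaching a pedestrian crossing at 50 km/h.
v = kmh_to_mps(50)                       # about 13.9 m/s
early = required_deceleration(v, 60.0)   # braking starts 60 m before the crossing
late = required_deceleration(v, 20.0)    # braking starts 20 m before the crossing

print(f"early: {early:.2f} m/s^2, late: {late:.2f} m/s^2")
# prints "early: 1.61 m/s^2, late: 4.82 m/s^2"
```

Because the required deceleration scales with 1/d, starting to brake three times as far from the crossing needs one third of the deceleration. This is one measurable way in which an early, gradual stop differs from a late, sudden one, independent of how pedestrians end up interpreting it.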
Other examples of unintended consequences of AV behaviour are slow driving and large following distances, which can frustrate other drivers and provoke dangerous manoeuvres (Knoop et al., 2019), and miscommunication in merging scenarios due to unusually large gaps left by an AV (Brown & Laurier, 2017). Aspects of social behaviour can be proactive or reactive: proactive behaviours occur in anticipation of a situation that has not yet manifested (e.g., moving to the right-most lane early on the highway in preparation for an upcoming off-ramp, or slowing down further in a school zone around the time school ends), whereas reactive behaviours only occur when a situation presents itself (e.g., braking in response to another vehicle using its indicator lights).

Based on the above, a preliminary value-free definition of social driving behaviour, which attempts to unite engineering and social science perspectives, was prepared for the interview study. We define social driving behaviour as: driving behaviour that directly or indirectly influences and/or takes into account other road users, e.g. their state, behaviour and goals.

2.3. Primer on AV design

A condensed description of AV design follows to facilitate a basic understanding of the engineering perspective on social driving behaviour.

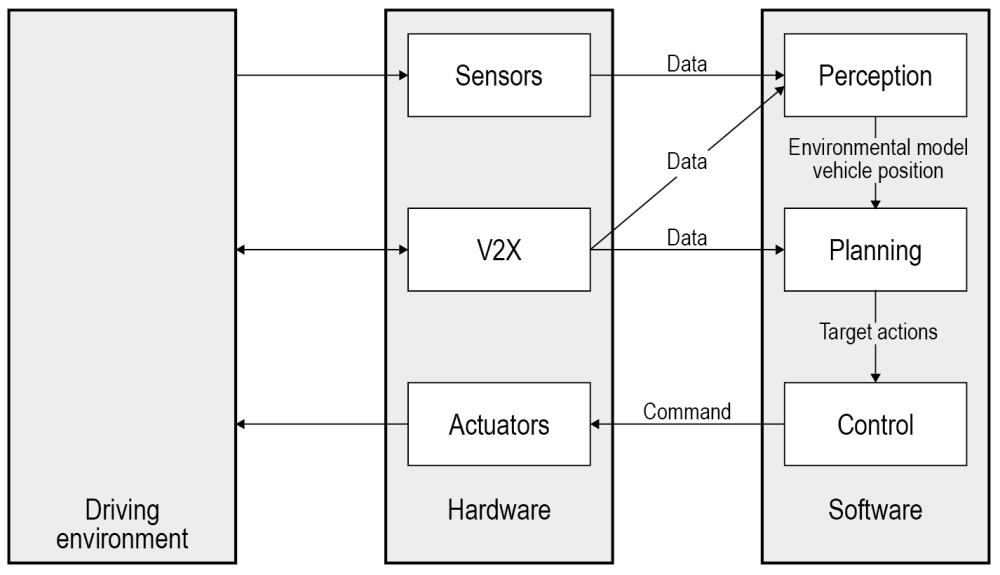

According to Pendleton et al. (2017), AVs interact with the driving environment through hardware, involving sensors (e.g., radar) and actuators (e.g., a steering system), as well as through communication with other vehicles or infrastructure (e.g., vehicle-to-everything, V2X); see Figure 1. Within the software realm, Pendleton et al. (2017) distinguish three subsystems: perception, planning, and control. Algorithms in the perception subsystem use sensor and V2X data to perceive and recognize objects in the environment as well as the ego vehicle’s state (e.g., its own speed). For example, a radar sensor may report distances to objects, as well as changes in these distances, while the algorithms translate these data into specific other road users or objects and their states and characteristics. One step further, these algorithms can also predict possible future states of surrounding objects. Together, this yields a model of the vehicle’s position in relation to its environment. Next, the planning subsystem uses this information to plan target actions for the vehicle. To determine which target actions to take, the planning subsystem weighs a number of factors. This is done by a so-called ‘cost function’: a mathematical formula that combines a number of different factors with weights to provide a ‘cost’ for each option in a set of candidate target actions. The higher the ‘cost’, the less desirable that specific candidate action becomes. Factors could relate to, for example, travel time, driver comfort, fuel efficiency, and safety. By optimizing for the lowest cost (or highest benefit), these factors and their weights help a vehicle determine what decision to make and what to avoid. Different types of ‘planners’ are described by Pendleton et al. (2017), reminiscent of Michon’s (1985) hierarchical levels of driving: the mission planner determines, e.g., that the vehicle drives from A to B, whereas the behavioural planner determines, e.g., when to perform an overtaking manoeuvre, and the motion planner determines the exact desired motion path, including positions and velocities at each time step. Finally, the motion path is executed in the control subsystem. The implementation of these functions may differ between vehicles and manufacturers.
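The cost-function idea can be illustrated with a small sketch that scores a few candidate actions with a weighted sum of factor penalties and selects the cheapest one. This is a minimal illustration under stated assumptions, not how any particular AV planner works: the candidate names (`keep_lane`, `overtake`, `slow_down`), the normalised factor scores, and the weights are all hypothetical, and a weighted sum is assumed here as the simplest way to combine factors. Real planners typically evaluate far more candidates and factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hypothetical candidate target action with per-factor penalty scores.

    Scores are assumed to be normalised to [0, 1], where higher is worse
    (longer travel time, less comfort, more fuel used, more risk).
    """
    name: str
    travel_time: float
    discomfort: float
    fuel_use: float
    risk: float


# Hypothetical weights: a larger weight makes that factor count more.
WEIGHTS = {"travel_time": 1.0, "discomfort": 0.5, "fuel_use": 0.3, "risk": 5.0}


def cost(candidate: Candidate, weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of factor penalties; a lower cost means a more desirable action."""
    return sum(w * getattr(candidate, factor) for factor, w in weights.items())


def choose(candidates: list[Candidate], weights: dict[str, float] = WEIGHTS) -> Candidate:
    """Return the candidate action with the lowest cost."""
    return min(candidates, key=lambda c: cost(c, weights))


candidates = [
    Candidate("keep_lane", travel_time=0.6, discomfort=0.1, fuel_use=0.2, risk=0.05),
    Candidate("overtake", travel_time=0.2, discomfort=0.4, fuel_use=0.5, risk=0.20),
    Candidate("slow_down", travel_time=0.9, discomfort=0.2, fuel_use=0.1, risk=0.02),
]

print(choose(candidates).name)                            # keep_lane
print(choose(candidates, {**WEIGHTS, "risk": 1.0}).name)  # overtake
```

Lowering the hypothetical risk weight from 5.0 to 1.0 flips the choice from keeping the lane to overtaking. This illustrates the point made above: the weights, and not only the factors themselves, shape which behaviour the vehicle ultimately shows in traffic.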

3. Method

A semi-structured interview study was conducted to gain insight into current AV technological capabilities with regard to social driving behaviour and road safety, and their development in the near future. A thematic analysis (Braun & Clarke, 2006) was performed on the interview results.

3.1. Participants

Prospective participants were identified through web searches (with follow-up inquiries at the resulting companies and institutes) and through the authors’ professional network. They were subsequently selected based on their experience with the development of AVs (e.g., based on published papers). A total of 12 prospective participants were contacted directly by e-mail, which detailed the purpose of the study and requested their participation in an interview. Seven of them (all male, age range 35-49 years, M = 41.8, SD = 4.8) replied positively and agreed to participate anonymously, covering backgrounds ranging from academia and research institutes to the automotive industry and vehicle authorities (see Table 1). At the time of the interview, participants were working across three European countries (Germany, the Netherlands, Sweden), with backgrounds within and outside Europe. Across the participants, experience included object detection, object tracking, object path prediction, motion planning, decision making, safe driving policies, safety acceptance criteria, modelling human driving behaviour, and cooperation between AVs and infrastructure, pedestrians, and conventional vehicles. Ethical approval for this study was obtained from the SWOV ethics committee.

| Pp | Role | Aca | Ind | Res | Pp |
|---|---|---|---|---|---|
| 1 | Employee automotive supplier of AV software | X | 1 | | |
| 2 | Assistant professor computer vision and perception (previously: robotaxi company) | X | (X) | 2 | |
| 3 | Associate professor computer vision, perception and prediction (previously: automotive company) | X | (X) | 3 | |
| 4 | Leader of the group for cooperative system automation and integration | X | 4 | | |
| 5 | Chief inspector | 5 | | | |
| 6 | Research scientist and manager AV behaviour and motion planning | (X) | X | 6 | |
| 7 | Assistant professor / Senior research scientist AV safe and social interactions | X | X | 7 | |

3.2. Materials

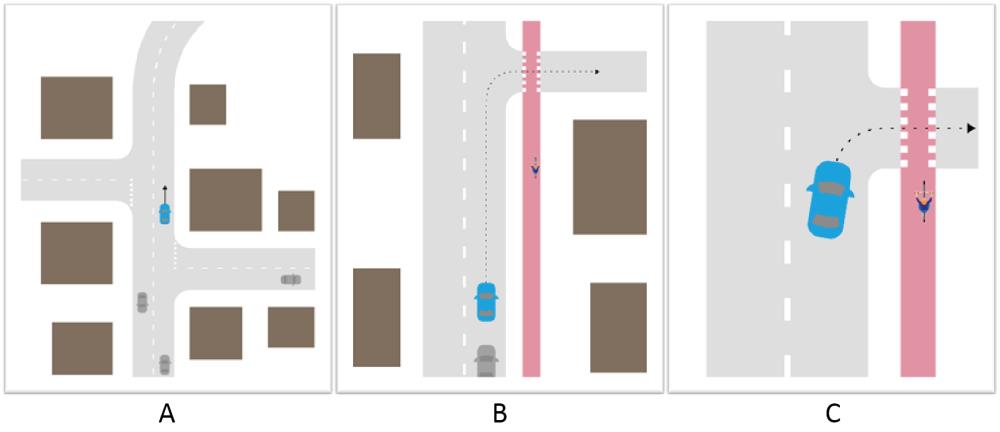

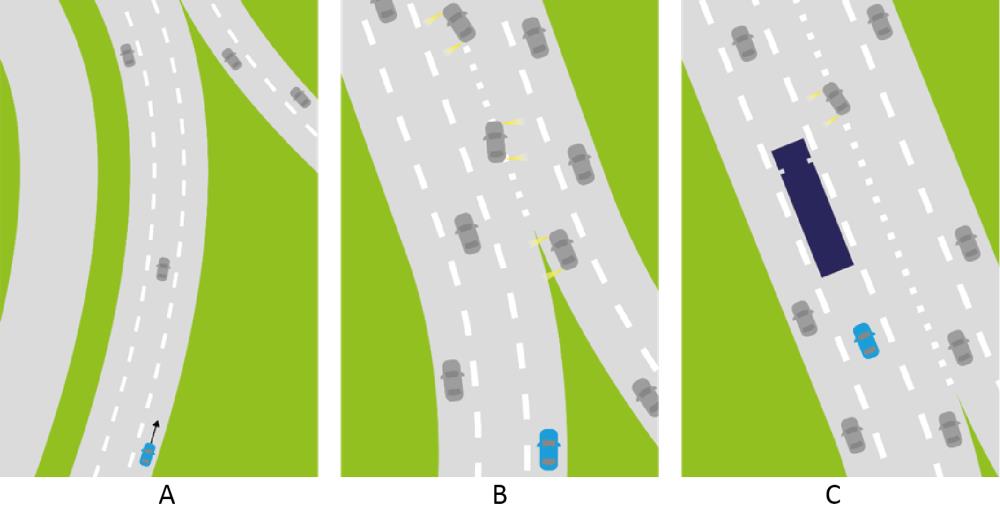

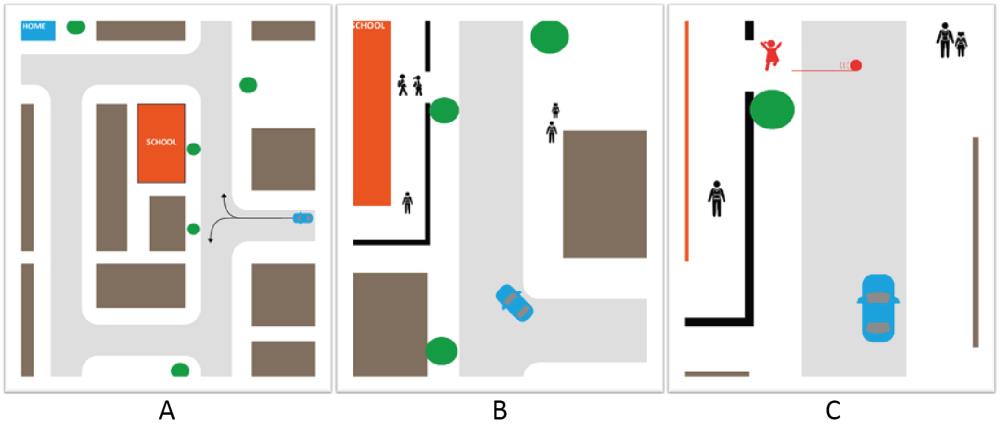

The study consisted of two parts: an online survey and a semi-structured interview. The survey gathered background information (e.g., age, profession, experience with AV development) on participants and sensitized them to the topic of social driving behaviour for a more fruitful interview. Three scenarios were prepared for the survey: a right-angle conflict with a bicycle, merging on a highway, and approaching a school (see Appendix A). Each illustrated scenario consisted of three consecutive steps in time, zooming in from the overall traffic context to specific interactions between an ego vehicle and other road users. Each step was accompanied by a set of three questions asking which driver behaviour is required to avoid a conflict, which cues facilitate such behaviour, and whether the participant’s answer would differ if the ego vehicle were an AV. The scenarios fostered reflection on interactions between AVs and other road users before ultimately raising the question of how social driving behaviour should be defined from the perspective of an AV.

A semi-structured interview was prepared to ensure that the same topics and terminology were used for all participants. The research question was transformed into interview questions using the guidelines described by Kumar (2011). Four main points were addressed (see also Appendix B): 1) social aspects of driving behaviour (e.g., definition of social driving behaviour, example scenarios), 2) the development process (e.g., requirements, algorithms, limitations, updates and improvements), 3) the relation between social driving behaviour and traffic safety, and 4) challenges in realizing potential benefits of social driving behaviour. An interview template was created by interleaving the interview questions with answer fields and with prompts for the interviewer to ask for additional details based on the interviewees’ answers. The understandability of the survey and interview questions, as well as the general flow of the interview, was piloted with a human factors researcher focusing on AV technology.

3.3. Procedure

A link to the online survey was sent in the week of the interview. To shorten the survey, two of the three scenarios were randomly selected for each participant. All surveys had been completed by the time the interviews were conducted. The interviews were held online using Microsoft Teams in November 2023 and lasted approximately one hour. Participants were again informed about the purpose of the study, asked whether they preferred to remain anonymous, and asked how they should be referred to in subsequent reporting. With their consent, the interviews were recorded to facilitate analysis by the authors of the present paper. Next, open-ended semi-structured interview questions were posed to allow the participants to share their views in their own terms. When needed, follow-up questions were asked to ensure participants’ answers were interpreted correctly. When participants seemed to have difficulty providing examples of social driving behaviour, their previous answers relating to the selected survey scenarios were used to facilitate discussion. Assisted by the interview template, author RJJ conducted all interviews, while authors DC and RdZ took notes. Any additional questions based on these notes were asked at the end of the interview. Participants frequently gave elaborate answers that addressed not only the current interview question but also questions not yet asked; in such cases, the already-answered question was skipped.

3.4. Data logging & analysis

A thematic analysis was performed in line with the phases described by Braun and Clarke (2006). The main purpose of this analysis was to provide a ‘rich description’ of the data set, in which themes are identified at the ‘semantic level’. A rich description means an accurate reflection of the content of the entire data set in which all statements are related to a theme (implying that themes can be based on a subset of the participants), and interpretation at the semantic level means that themes are identified within the explicit or surface meanings of the data (cf. Braun & Clarke, 2006).

Directly after the interviews both note-takers scanned through their notes for relevant statements that were made during the interviews (phase 1: familiarization). In case of unclear notes, the interview recording was consulted. All statements were gathered in a spreadsheet and categorized according to the original interview questions. Initial codes referring to interesting features of each statement were assigned bottom-up (phase 2: generating initial codes). Next, two of the authors individually identified themes by looking at statements with common codes (phase 3: searching for themes).

A joint session focused on reviewing the resulting set of themes (phase 4: reviewing the themes). Themes identified by both authors were selected straightaway, whereas themes identified by only one author were first discussed with the other author and selected only if they did not overlap with previously selected themes. A thematic map was created to identify how themes relate to each other, to ensure there was not too much overlap between themes, and to identify sub-themes. An initial description of each (sub-)theme was formulated (phase 5: defining and naming themes). Finally, statements capturing the essence of a theme were selected, and a description of the theme was prepared (phase 6: producing the report). For each theme, concrete examples were provided in text boxes to make the theme more tangible for social scientists (as recommended by Hadfield-Hill et al., 2020). Typically, summaries of the verbatim statements were created, partly to reduce the length of the (at times elaborate) verbatim and partly to improve readability, given that examples were frequently interrupted by other examples or trains of thought. A draft version of the results section was presented to the participants, who were asked to indicate whether the interpretation of their statements (including the aforementioned summaries of the verbatim) corresponded with the original intention of the statements. All participants provided feedback, and the results section was revised accordingly.

4. Results

Statements made by specific participants are hereafter referred to by their participant number (e.g., ‘P1’, see Table 1 for more information on the participants).

4.1. Defining and framing social driving behaviour

In the questionnaire, participants were asked to provide their own definition of social driving behaviour. These definitions generally contained the following terminology: anticipation, social norms, social context, interactions, politeness, communication, social interaction, interpretation of behaviour, and common understanding of behaviour in traffic. Most definitions highlighted the positive aspects of social driving behaviour. At the start of the interview, participants were shown our value-free definition of social aspects of driving behaviour: “driving behaviour that directly or indirectly influences and/or takes into account other road users, i.e. their state, behaviour or goals”, where social driving behaviour can lie anywhere on a continuous scale from anti-social to pro-social, i.e., from disrupting to facilitating others’ state, behaviour, and/or goals. All participants understood and agreed to adopt this definition for the remainder of the interview.

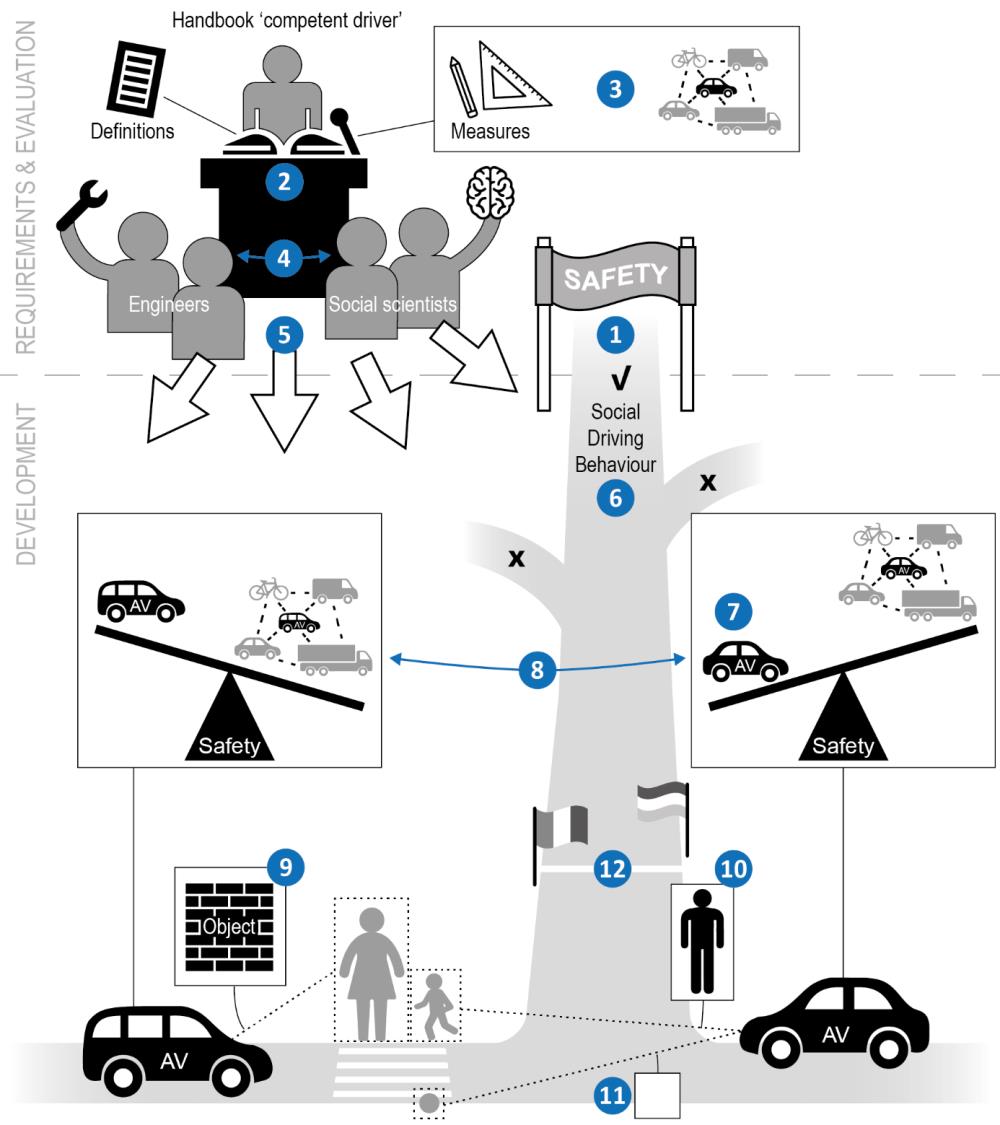

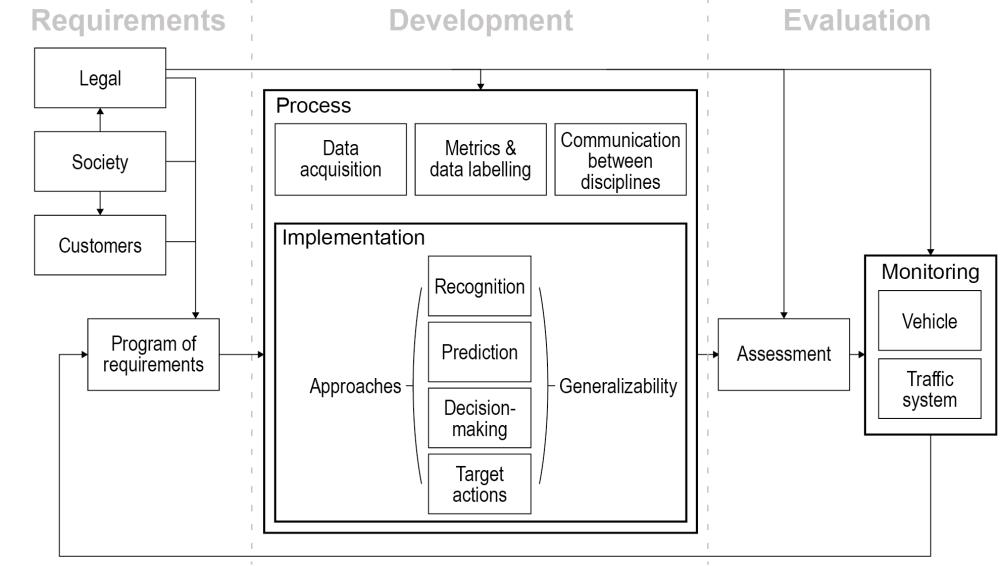

The remarks made by participants during the interview related to a wide range of themes throughout the development process, which eventually provided the basis for the thematic map shown in Figure 2. The process consists of three phases: requirements, development and evaluation. Within these phases several themes are represented by blocks. In the following paragraphs each theme is presented in more detail.

4.2. Process phase I: Requirements

Requirements for development follow from stakeholder input across several domains (legal, society, customers), as well as from industry itself.

4.2.1. Legal

Legislation was named multiple times as a way to incentivize the automotive industry to implement or take into account social aspects of driving behaviour (P2, P4, P7). In this regard, participants also mentioned the requirement in the ALKS regulation (UNECE, 2021) to minimize risks to at least the level of a careful and competent human driver. The main challenge here is how to prove adherence to this requirement, i.e., how it is technically defined and measured (P5, P6, P7).

Another requirement that was mentioned was the transparency of the algorithms (P2, P3). This can influence the choice of modelling approach, i.e., black-box or white-box models. Also, legislation regarding exceptional road users, such as funeral processions and blind people, was mentioned as difficult for AVs to deal with (P2).

Legislation was also mentioned as a means to support AVs, for example by counteracting the bullying of AVs (P2).